Going beyond Visualization: Verbalization as Complementary Medium to Explain Machine Learning Models

Abstract

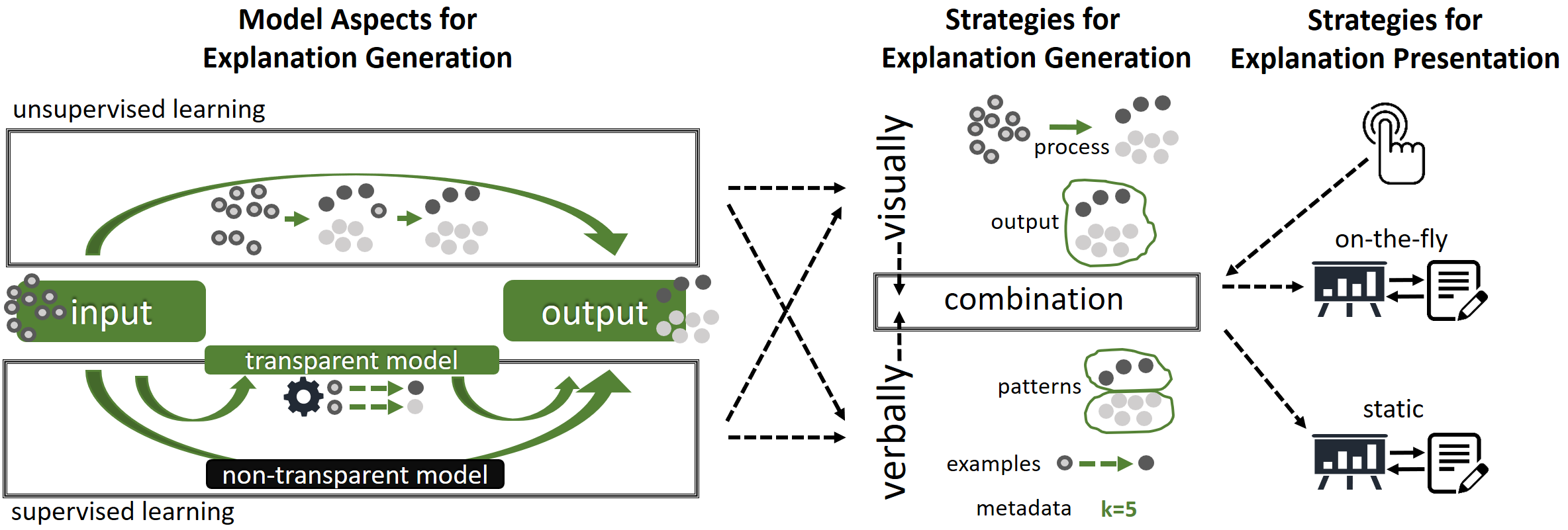

In this position paper, we argue that combining visualization and verbalization techniques is beneficial for creating broad and versatile insights into the structure and decision-making processes of machine learning models. Explainability of machine learning models is emerging as an important area of research. Insights into the inner workings of a trained model allow users and analysts alike to understand the models, develop justifications, and gain trust in the systems they inform. Explanations can be conveyed through different media, such as visualizations and verbalizations. Both are powerful tools that enable model interpretability. However, while their combination is arguably more powerful than either medium alone, they are currently applied and researched independently. To support our position that combining the two techniques is beneficial for explaining machine learning models, we describe the design space of such a combination and discuss emerging research questions, gaps, and opportunities.